I have cloned an ELK cluster with all its data and configuration. I re-created the cluster on one node with the command “elasticsearch-node unsafe-bootstrap”. On the other node I ran “elasticsearch-node detach-cluster” to detach it from the old cluster so that it could join the new one. I also re-created the security index with the new settings using the “securityadmin.sh” script.

Up to this point everything went fine. I have the new cluster up and running with no errors.

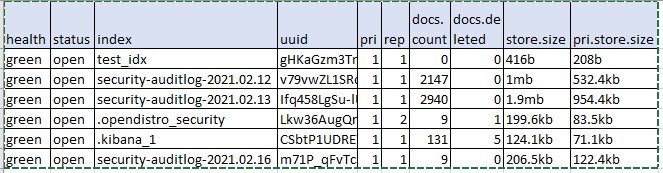

When I log into Kibana, I can see the new indexes correctly.

GET /_cat/indices?v

The problem is that all the data from the previous cluster is still on disk, taking up unnecessary space. The new cluster doesn’t see that data, or at least it doesn’t see the old index data.

GET /_cat/allocation?v&pretty

| shards | disk.indices | disk.used | disk.avail | disk.total |
|---|---|---|---|---|
| 6 | 337kb | 32,4gb | 10,5gb | 49,9gb |
| 6 | 1.7mb | 16,2gb | 26,7gb | 42,9gb |

Notice that in image 1 the indexes that ELK holds occupy less than 1 MB. On disk, however, more than 30 GB is still being used unnecessarily.
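To put a number on that gap, here is a rough sketch in Python that subtracts disk.indices from disk.used for each of the two rows above. The names `parse_size`, `unaccounted_gb` and `ROWS` are made up for this illustration, and the values are copied by hand from the output I pasted. Keep in mind that disk.used covers the whole filesystem of the node, so the result is an upper bound: it also includes the operating system and anything else stored there, not only leftover data from the old cluster.

```python
import re

# Multipliers for the size suffixes printed by the _cat APIs (binary units).
UNITS = {"b": 1, "kb": 1024, "mb": 1024**2, "gb": 1024**3, "tb": 1024**4}


def parse_size(text):
    """Convert a value such as '337kb' or '32,4gb' to a number of bytes."""
    match = re.fullmatch(r"([\d.,]+)([a-z]+)", text.strip().lower())
    if match is None:
        raise ValueError(f"unrecognised size: {text!r}")
    number, unit = match.groups()
    # Some values in the pasted output use a decimal comma; treat it as a point.
    return float(number.replace(",", ".")) * UNITS[unit]


def unaccounted_gb(disk_indices, disk_used):
    """Disk space in GB that is used but not attributed to current indices."""
    return (parse_size(disk_used) - parse_size(disk_indices)) / UNITS["gb"]


# (disk.indices, disk.used) pairs copied from the allocation output above.
ROWS = [("337kb", "32,4gb"), ("1.7mb", "16,2gb")]

for disk_indices, disk_used in ROWS:
    gap = unaccounted_gb(disk_indices, disk_used)
    print(f"disk.indices={disk_indices} disk.used={disk_used} gap={gap:.1f} GB")
```

On both nodes practically all of the used space falls outside what the current indexes account for, which is what makes me think the old cluster's data is still sitting on disk.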

How can I clean up the data that ELK is not using? I don’t need it at all. As far as I’m concerned, it could all be deleted without any problems.

Thanks